Deep Learning Notes | Deep CNN: ResNets

Deep CNN - ResNets

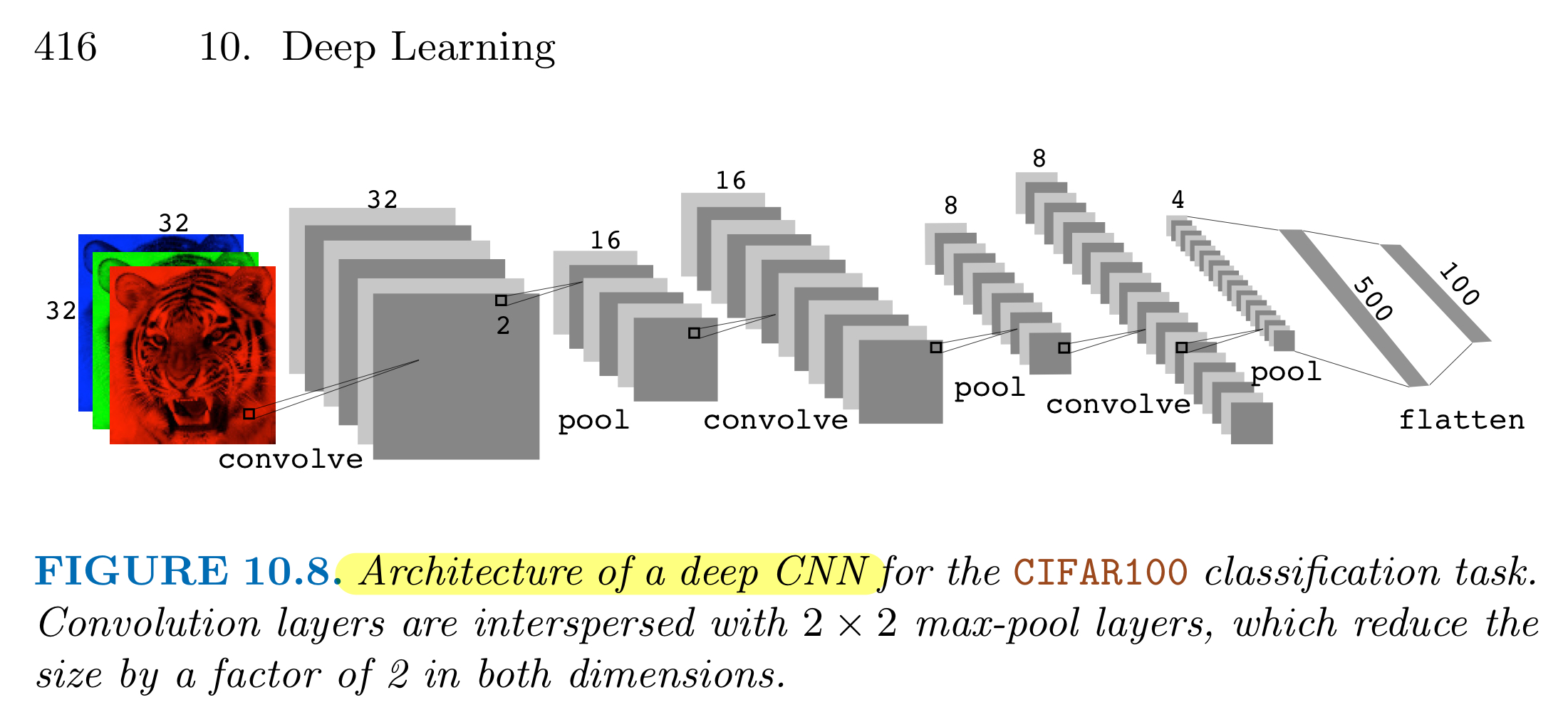

1. Deep Convolutional Neural Networks

In recent years, neural networks have become much deeper, with state-of-the-art networks evolving from having just a few layers (e.g., AlexNet) to over a hundred layers.

The main benefit of a very deep network is that it can represent very complex functions. It can also learn features at many different levels of abstraction, from edges (at the shallower layers, closer to the input) to very complex features (at the deeper layers, closer to the output).

Problems:

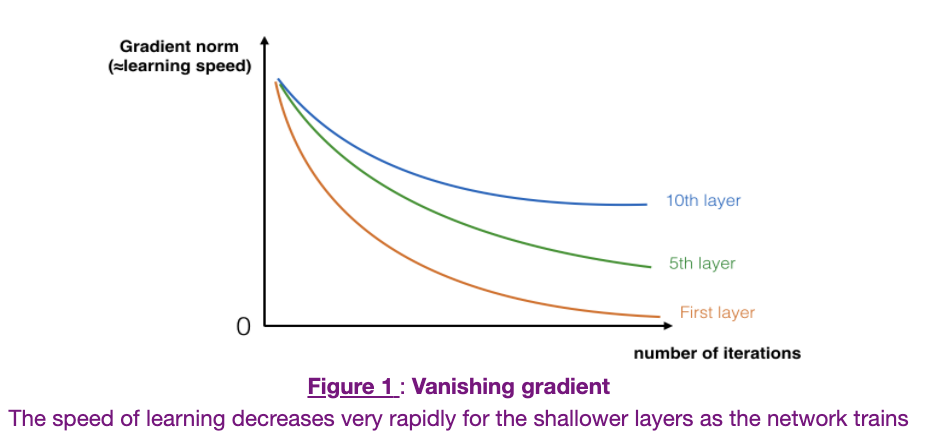

Vanishing Gradients: very deep networks often have a gradient signal that goes to zero quickly, thus making gradient descent prohibitively slow.

- during backpropagation, the gradient travels from the final layer back to the first layer and is multiplied by a weight matrix at each step, so it can decrease exponentially quickly to zero

- or, in rare cases, grow exponentially quickly and “explode” to very large values.

- during training, the magnitude (or norm) of the gradient for the shallower layers decreases to zero very rapidly as training proceeds, as shown below:

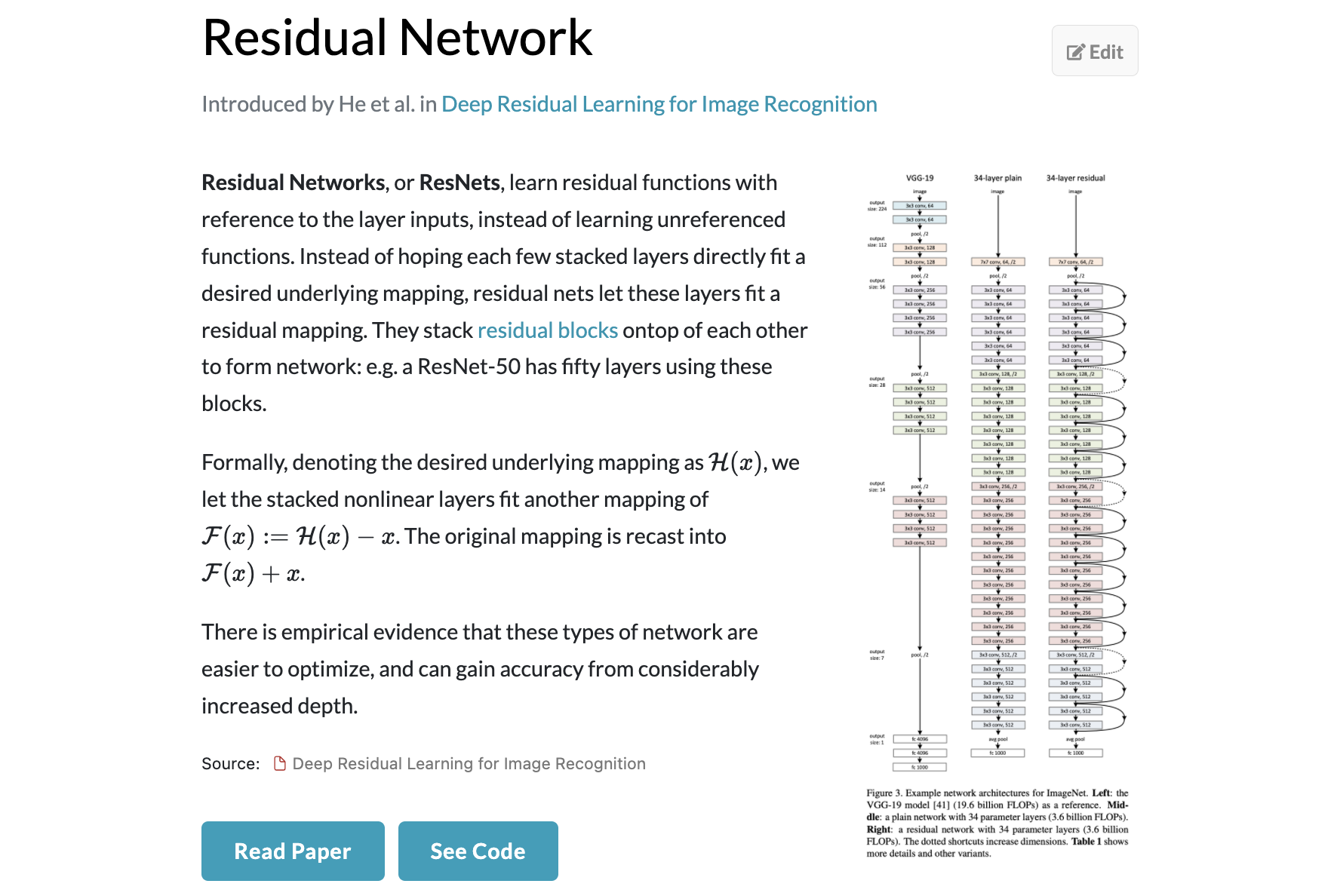
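A minimal scalar sketch of why the gradient vanishes or explodes. It assumes each layer multiplies the backpropagated gradient by the same factor `w` (a stand-in for the weight matrix and activation derivative), so after `L` layers the gradient is scaled by `w**L`. The function name and the factors 0.5 and 1.5 are illustrative assumptions, not values taken from a real network.

```python
def backprop_scale(w, num_layers):
    """Scale applied to the gradient after it flows back through num_layers
    plain layers, each of which multiplies it by the same factor w."""
    grad = 1.0  # gradient arriving at the final layer
    for _ in range(num_layers):
        grad *= w  # chain rule: one multiplication per layer
    return grad

for depth in (5, 20, 50):
    print(depth, backprop_scale(0.5, depth), backprop_scale(1.5, depth))
```

With `w = 0.5` the scale drops below 1e-6 after 20 layers, while with `w = 1.5` it grows past 3,000: the exponential dependence on depth is what makes very deep plain networks hard to train.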

Solution: Residual Networks (ResNets), whose shortcut connections let the gradient flow directly back to earlier layers.
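Ignoring the final ReLU, a residual block computes y = x + F(x), so by the chain rule dy/dx = 1 + F'(x): the shortcut contributes an identity term that the gradient can pass through even when F'(x) is tiny. The scalar sketch below compares the gradient reaching the input of a plain stack (each layer y = w·x) with that of a residual stack (each block y = x + w·x), assuming the same small per-layer derivative `w` in both. The choice `w = 0.01` over 50 layers is arbitrary, and real blocks also contain ReLU and BatchNorm, which this toy omits.

```python
def input_gradient(w, num_layers, residual):
    """d(output)/d(input) for a stack of scalar linear layers.

    Plain layer:    y = w * x      -> dy/dx = w
    Residual block: y = x + w * x  -> dy/dx = 1 + w (identity shortcut + branch)
    """
    grad = 1.0
    for _ in range(num_layers):
        local = (1.0 + w) if residual else w
        grad *= local  # chain rule through each layer or block
    return grad

plain = input_gradient(0.01, 50, residual=False)
res = input_gradient(0.01, 50, residual=True)
print(f"plain: {plain:.3e}, residual: {res:.3f}")
```

The plain stack's gradient collapses to roughly 1e-100, while the residual stack's stays on the order of 1, because every block contributes a factor close to 1 rather than close to 0.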

2. Build a Residual Network

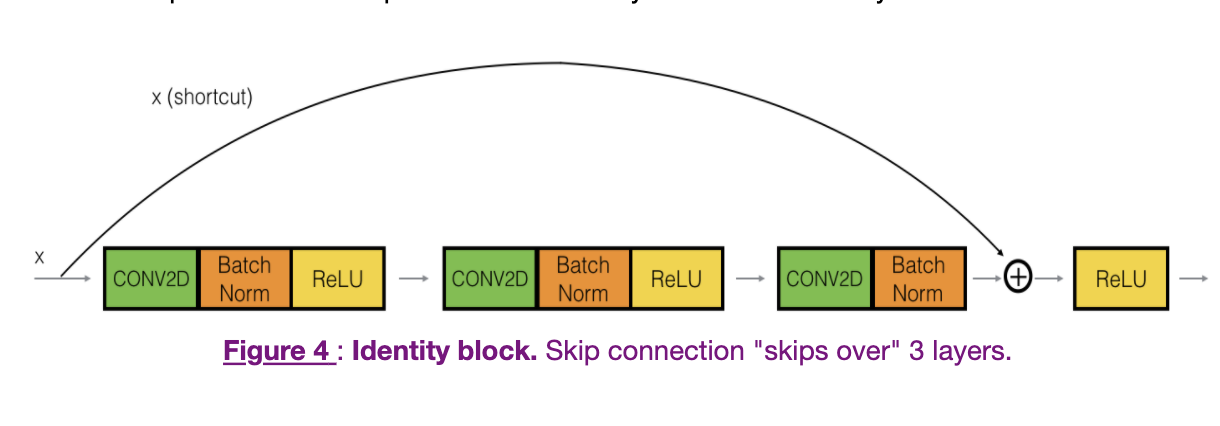

2.1. The Identity Block

The identity block is the standard block used in ResNets, and corresponds to the case where the input activation has the same dimension as the output activation.

- The upper path is the “shortcut path.”

- The lower path is the “main path.”

- To speed up training, a BatchNorm step is added between the CONV2D and ReLU steps in each layer

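- Having the shortcut makes it easy for a block to learn the identity function: if the main path's output is driven to zero, the block simply passes its (non-negative, post-ReLU) input through, so stacking additional blocks carries little risk of hurting training performance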


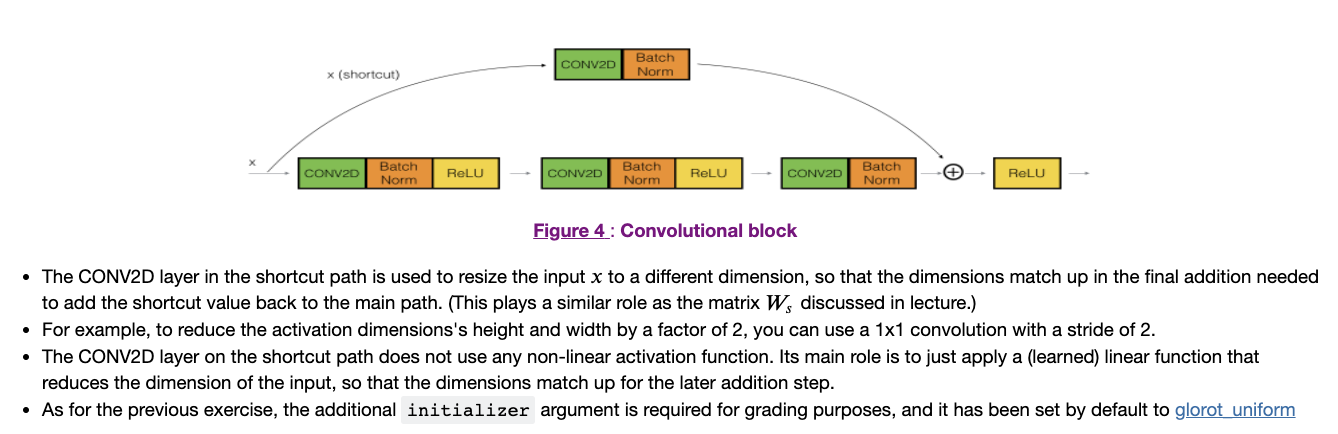
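To illustrate the identity-function point, here is a simplified identity block in plain Python. It is a sketch under loud assumptions: dense (fully connected) layers stand in for CONV2D, BatchNorm is omitted, the main path has two layers, and the helper names are hypothetical. With all main-path weights and biases set to zero, the block returns its non-negative input unchanged.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def identity_block(x, W1, b1, W2, b2):
    """Dense-layer analogue of a ResNet identity block (no BatchNorm).

    Main path:     Linear -> ReLU -> Linear
    Shortcut path: x unchanged, so input and output sizes must match
    Output:        ReLU(main(x) + x)
    """
    h = relu(add(matvec(W1, x), b1))  # first layer of the main path
    main = add(matvec(W2, h), b2)     # second layer, before the final ReLU
    return relu(add(main, x))         # add the shortcut, then apply ReLU

n = 3
zeros_W = [[0.0] * n for _ in range(n)]
zeros_b = [0.0] * n
x = [0.5, 2.0, 0.0]  # non-negative, like the output of a previous ReLU
print(identity_block(x, zeros_W, zeros_b, zeros_W, zeros_b))  # [0.5, 2.0, 0.0]
```

A plain (non-residual) layer would instead have to learn weights that reproduce the identity exactly, which is harder for gradient descent than pushing weights toward zero.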

2.2. The Convolutional Block

The ResNet Convolutional Block is the second block type, which is used when the input and output dimensions don’t match up.

- The difference from the identity block is that the shortcut path contains a CONV2D layer (followed by BatchNorm) that resizes the input to the output dimensions:


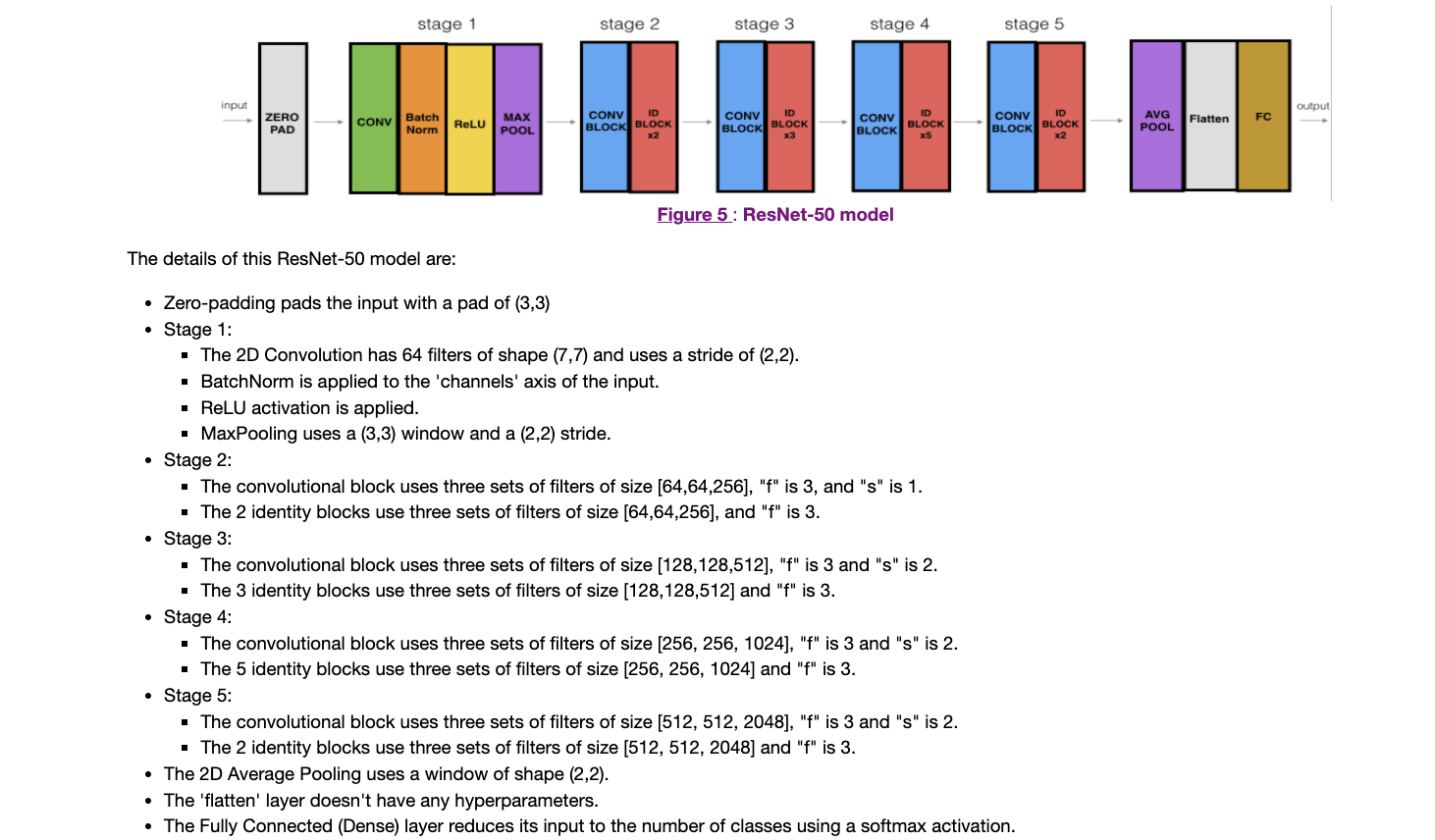
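The shapes show why the shortcut needs its own CONV2D. The sketch below traces (height, width, channels) through a bottleneck convolutional block using the standard convolution output-size formula floor((n + 2p - f) / s) + 1. It assumes the main path is a 1×1 conv with stride s, a 3×3 conv with 'same' padding, and a 1×1 conv, and that the shortcut is a single 1×1 conv with stride s; the 15×15×256 input and the filters (128, 128, 512) are illustrative values, and the function names are hypothetical.

```python
def conv_out(n, f, stride=1, pad=0):
    """Spatial output size of a convolution: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * pad - f) // stride + 1

def conv_block_shapes(h, w, c, filters, s):
    """Trace (height, width, channels) through both paths of a convolutional block."""
    f1, f2, f3 = filters
    main = []
    h1, w1 = conv_out(h, 1, s), conv_out(w, 1, s)          # 1x1 conv, stride s
    main.append((h1, w1, f1))
    h2, w2 = conv_out(h1, 3, 1, 1), conv_out(w1, 3, 1, 1)  # 3x3 conv, 'same' padding
    main.append((h2, w2, f2))
    h3, w3 = conv_out(h2, 1), conv_out(w2, 1)              # 1x1 conv
    main.append((h3, w3, f3))
    shortcut = (conv_out(h, 1, s), conv_out(w, 1, s), f3)  # 1x1 conv, stride s
    can_add_identity = (h, w, c) == main[-1]  # would a plain shortcut fit?
    return main, shortcut, can_add_identity

main, shortcut, can_add_identity = conv_block_shapes(15, 15, 256, (128, 128, 512), s=2)
print("main:", main, "shortcut:", shortcut, "identity shortcut fits:", can_add_identity)
```

Both paths end at 8×8×512 while the input is 15×15×256, so adding the raw input (as the identity block does) would fail; the strided 1×1 shortcut convolution resizes it to match.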

3. ResNet-50 Model


Summary:

- Total params: 23,600,006

- Trainable params: 23,546,886

- Non-trainable params: 53,120
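These totals can be reproduced by hand, which also explains the non-trainable count: a BatchNorm layer over C channels stores 4C values, of which the scale and offset (2C) are trained and the moving mean and variance (2C) are not. The sketch below rests on assumptions the notes do not spell out: Conv2D layers with biases, BatchNorm after every convolution (including the shortcut one), a 7×7 stem with 64 filters on a 3-channel input, the standard ResNet-50 stages of 3, 4, 6 and 3 blocks with filters (64, 64, 256) up to (512, 512, 2048), and a dense softmax head over 6 classes on a 2048-dimensional pooled feature vector. Under these assumptions the counts match the summary; the function names are hypothetical.

```python
# Hypothetical helpers; the layer layout is an assumption (see the lead-in).

def conv_params(f, c_in, c_out):
    """f x f kernel weights for each (input, output) channel pair, plus one bias per output channel."""
    return f * f * c_in * c_out + c_out

def bottleneck_params(c_in, filters, conv_shortcut):
    """Return (conv parameters, BatchNorm channels) for one ResNet-50 block."""
    f1, f2, f3 = filters
    convs = conv_params(1, c_in, f1) + conv_params(3, f1, f2) + conv_params(1, f2, f3)
    bn_channels = f1 + f2 + f3  # one BatchNorm after each main-path conv
    if conv_shortcut:  # convolutional block: 1x1 conv + BatchNorm on the shortcut
        convs += conv_params(1, c_in, f3)
        bn_channels += f3
    return convs, bn_channels

def resnet50_params(num_classes=6, in_channels=3):
    """Return (total, trainable, non-trainable) parameter counts."""
    convs = conv_params(7, in_channels, 64)  # 7x7 stem convolution
    bn_channels = 64                         # BatchNorm after the stem
    c_in = 64
    # (filters, number of identity blocks following the convolutional block)
    stages = [((64, 64, 256), 2), ((128, 128, 512), 3),
              ((256, 256, 1024), 5), ((512, 512, 2048), 2)]
    for filters, n_identity in stages:
        c, bn = bottleneck_params(c_in, filters, conv_shortcut=True)
        convs += c
        bn_channels += bn
        c_in = filters[2]
        for _ in range(n_identity):
            c, bn = bottleneck_params(c_in, filters, conv_shortcut=False)
            convs += c
            bn_channels += bn
    dense = c_in * num_classes + num_classes     # softmax head on 2048-d features
    trainable = convs + dense + 2 * bn_channels  # plus BatchNorm gamma and beta
    non_trainable = 2 * bn_channels              # BatchNorm moving mean and variance
    return trainable + non_trainable, trainable, non_trainable

total, trainable, non_trainable = resnet50_params()
print(f"Total: {total:,}  Trainable: {trainable:,}  Non-trainable: {non_trainable:,}")
# Total: 23,600,006  Trainable: 23,546,886  Non-trainable: 53,120
```

The 53,120 non-trainable parameters correspond to 26,560 BatchNorm channels across the whole network (two running statistics each).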

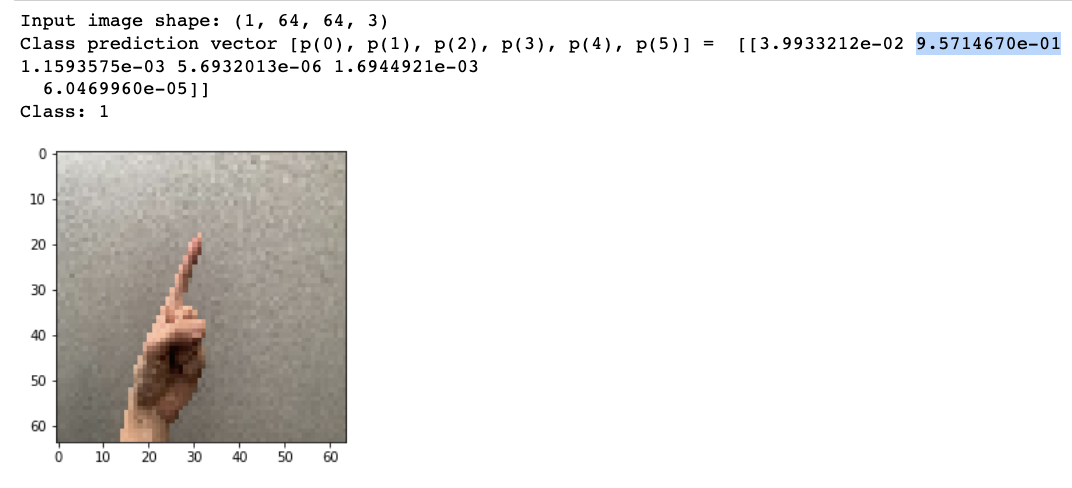

4. Use a pre-trained model to classify images
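Whatever the pre-trained model is, the last step of classification is the same: a softmax turns the model's raw scores into one probability per class, and the predicted label is the class with the highest probability. A minimal sketch of that step, assuming the raw scores (logits) for one image are already available as a list; the scores and the class labels "0" to "5" are made up for illustration, and the helper names are hypothetical. If the model already ends in a softmax layer, skip the `softmax` call and rank its output directly.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(probs, labels, k=3):
    """Return the k (label, probability) pairs with the highest probability."""
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical raw scores for one image over 6 hypothetical classes.
labels = ["0", "1", "2", "3", "4", "5"]
logits = [0.2, 1.5, 3.1, -0.4, 0.9, 2.0]
probs = softmax(logits)
print(top_k(probs, labels))
```

Because softmax is monotonic, the top-ranked class is the same as the argmax of the raw scores; the probabilities are only needed when reporting confidence.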


Load Packages
